Joomla Benchmarks: LiteSpeed vs. Apache

The LSCache for Joomla Module is one of our newest cache plugins. We recently teamed up with Svend Gundestrup to generate a series of new benchmarks using one of his Joomla sites in Denmark. Svend takes a scientific approach to benchmarking, which we’ll discuss in detail after we show you the test results.

We should mention that Svend performed all tests on his own, without any compensation from LiteSpeed, and using equipment he purchased himself.

Benchmarks

Return Load Tests were run using four different methods:

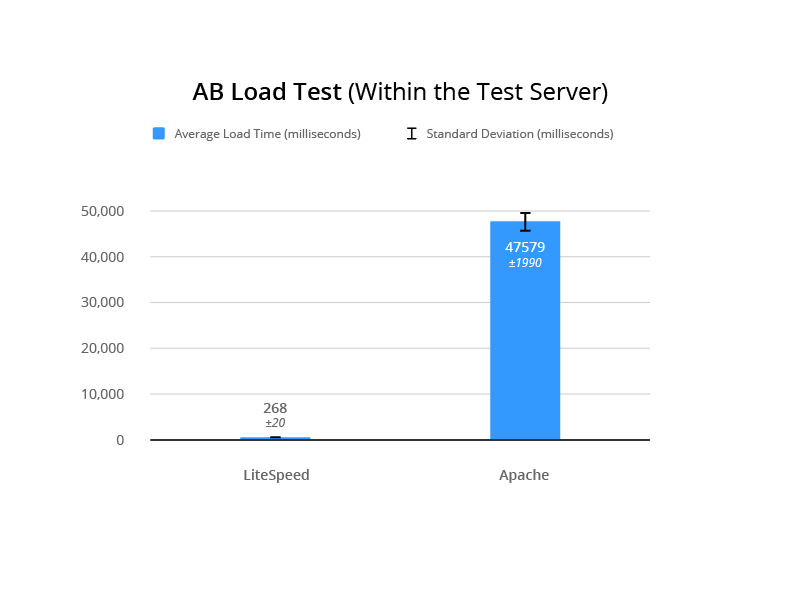

- AB from within the test server

- AB from an external server in the same region

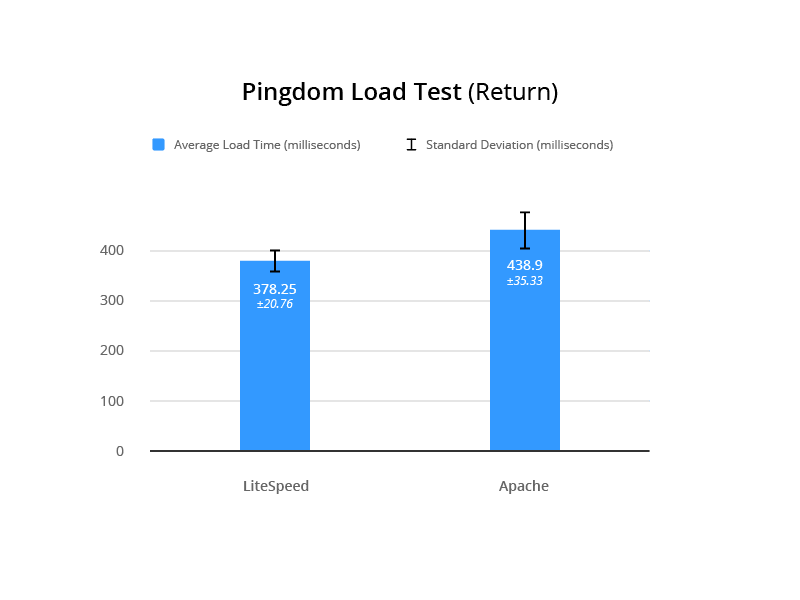

- Pingdom

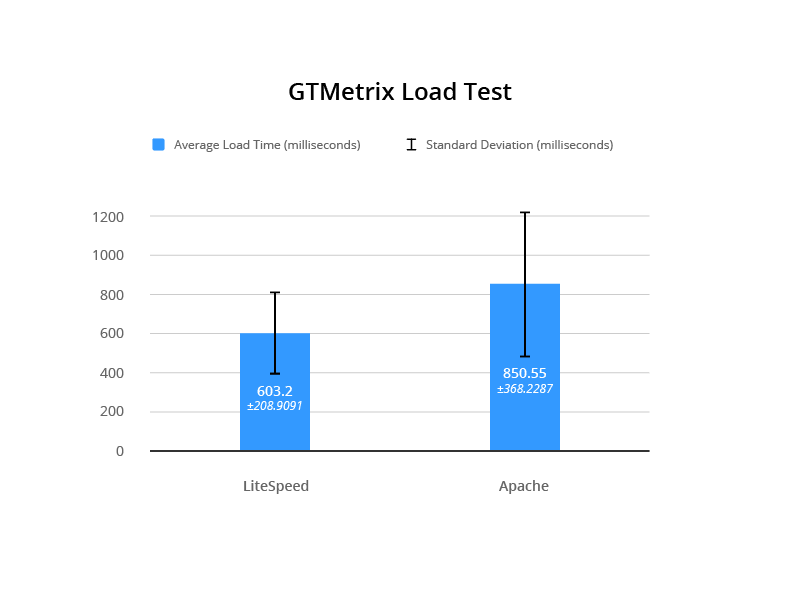

- GTmetrix

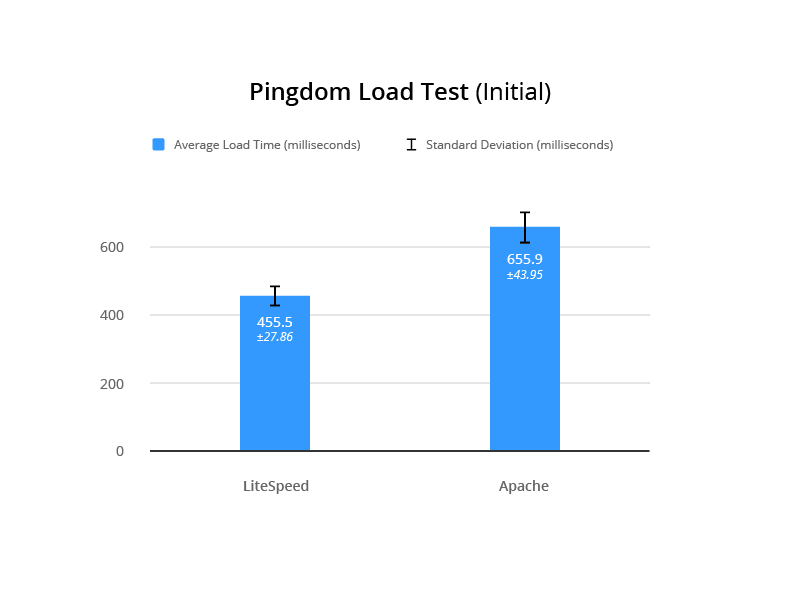

Additionally, one set of Initial Load Tests was performed:

- Pingdom

Each type of test was run 20 times per server, making a total of 100 benchmarks for each server+cache setup. We’ll share the details in the Specifics of Testing section below.

The goal was to compare the performance of two server and cache setups on a highly optimized Joomla website running in a production environment with a valid SSL certificate. We tested the following two setups:

- Apache event MPM + php-FPM + Memcached + JotCache (memcached)

- LiteSpeed Web Server + LSCache for Joomla

Each results graph shows the average speed in milliseconds. Additionally, Svend has included some figures that help to evaluate the results from a statistical perspective: standard deviation (SD), F-test, and T-test. We’ll talk more about what those numbers mean in the Definitions section below.

AB Test #1 (within the test server)

This AB load test was performed on the test server itself. The following command was used:

ab -k -n 1000 -c 100 -H "Accept-Encoding: gzip,deflate" -l https://www.lite.lungekursus.dk/

T-Test 6.12E-49

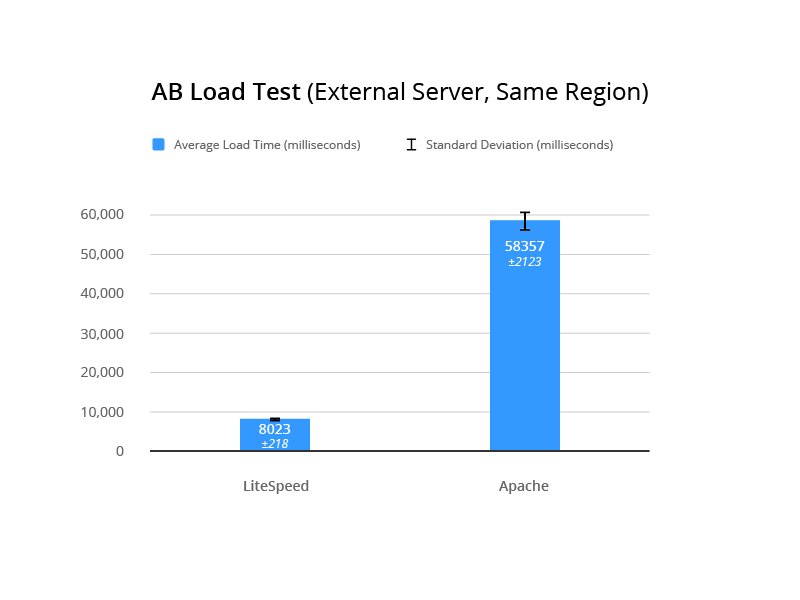

AB Test #2 (external server, same region)

This AB load test was performed from a server external to the test server. The following command was used:

ab -k -n 1000 -c 100 -H "Accept-Encoding: gzip,deflate" -l https://www.lite.lungekursus.dk/

Pingdom Speed Test

This test was run from Sweden to the test server in Amsterdam.

For the Initial Load Testing, the following command was run:

sudo /etc/init.d/php7.2-fpm restart && sudo /etc/init.d/apache2 restart

For each iteration, Svend cleared the cache, restarted the server from the command line, and then verified a cache miss, confirming that caching had no effect on the results.

F-Test 0.05369249473

T-Test 7.63E-20

For the Return Load Testing, the server was restarted once, all caching was cleared, and Svend loaded the website to verify a cache hit. The server was not reset in between tests.

F-Test 0.02524102277

T-Test 4.05E-08

GTmetrix

This test measured the time to fully load the page in the UK, served from the test server in Amsterdam.

F-Test 0.01739569946

T-Test 6.40E-03

Test Environment

Common To Both

DigitalOcean server: 4 GB, 80 GB SSD, 2 vCPUs, based in Amsterdam, with a target audience in Denmark

Config Server Firewall, IDS, Tripwire, MySQL, Fail2Ban

Joomla 3.8.7, JCH Optimizer Pro, Joomla Admin (with security enabled), JA Builder template

Apache

Apache 2.4 with event MPM running

PHP FPM 7.2.4.1

Memcached

JotCache (Memcached)

LiteSpeed

LiteSpeed Web Server 5.2.7

LSCache for Joomla 1.1.1

PHP 7.2.2-2+Xenial

Specifics of Testing

Each test was run as follows:

- service lsws stop

- ps -ef | grep httpd (to make sure LSWS is not running)

- service apache2 start

- ps -ef | grep apache2 (to make sure Apache is running)

- Enable JotCache (all plugins and dependencies)

- Run 20 iterations of each test

- service apache2 stop

- service lsws start

- Disable JotCache (and associated plugins)

- Enable LiteSpeed Cache

- Look for the x-litespeed-cache: hit/miss response header to ensure LSCache is working.

- Run 20 iterations of each test
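The header check in the steps above can also be scripted. What follows is a minimal Python sketch, not the method Svend used: `cache_status` and `fetch_cache_status` are hypothetical helper names, and only the `x-litespeed-cache` header name and its hit/miss values come from the steps above.

```python
from urllib.request import Request, urlopen


def cache_status(headers) -> str:
    """Return the x-litespeed-cache value in lowercase, or 'absent'.

    `headers` is any mapping of header names to values. Header names are
    compared case-insensitively, as HTTP requires.
    """
    for name, value in headers.items():
        if name.lower() == "x-litespeed-cache":
            return value.strip().lower()
    return "absent"


def fetch_cache_status(url: str, timeout: float = 10.0) -> str:
    """Request `url` once and report what the cache header says."""
    request = Request(url, method="GET")
    with urlopen(request, timeout=timeout) as response:
        return cache_status(response.headers)
```

Calling `fetch_cache_status` twice against the test site should, if LSCache is working, report a miss on the first request after a purge and a hit on the second. A result of `absent` would suggest the cache module is not enabled.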

Note: the .htaccess files remained unchanged during the testing
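Running 20 iterations by hand is tedious, so a small harness helps. The Python sketch below is an illustration only: it assumes the figure recorded from each AB run is the "Time per request ... (mean)" line of ab's summary, which the post does not confirm, and the names `parse_mean_ms`, `run_ab_once`, and `collect` are hypothetical. The ab arguments are the ones shown earlier in this post.

```python
import re
import subprocess

# ab prints two "Time per request" lines; this pattern matches only the
# first one, which ends in "(mean)".
_MEAN_LINE = re.compile(
    r"^Time per request:\s+([\d.]+) \[ms\] \(mean\)$", re.MULTILINE
)


def parse_mean_ms(ab_output: str) -> float:
    """Extract the mean time per request, in milliseconds, from ab output."""
    match = _MEAN_LINE.search(ab_output)
    if match is None:
        raise ValueError("no 'Time per request ... (mean)' line found")
    return float(match.group(1))


def run_ab_once(url: str) -> float:
    """Run ab with the options used in this post and return the mean in ms."""
    command = [
        "ab", "-k", "-n", "1000", "-c", "100",
        "-H", "Accept-Encoding: gzip,deflate", "-l", url,
    ]
    result = subprocess.run(command, capture_output=True, text=True, check=True)
    return parse_mean_ms(result.stdout)


def collect(measure, iterations: int = 20) -> list:
    """Call `measure` repeatedly and return the list of results."""
    return [measure() for _ in range(iterations)]
```

With ab installed, `collect(lambda: run_ab_once(url))` would gather one dataset of 20 results for whichever server is currently running.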

Definitions

Return Load Test: Load testing where the first load is discarded. This type of testing makes sense for evaluating a page cache, as the cache is not primed until the first page load triggers it.

Initial Load Test: Load testing where the server is restarted after each test. The purpose is to test the initial first page load of the server without influence from memcached or lscache. It’s essentially the opposite of Return Load Testing, and measures the performance of the server and PHP.

Standard Deviation: Abbreviated in our charts as SD, standard deviation measures the variation among individual test results from the mean result. A smaller standard deviation is preferable because it indicates some consistency in the test results. A higher SD indicates that the server speed is unstable. (Wikipedia)

F-Test: Compares the variance (spread) of two sets of results and returns a number (p-value). If the p-value is high, there is not a significant difference between the variability of the two sets of results. So, for our testing, we want to see a low p-value, indicating that there is indeed a significant difference between the consistency of the two servers. (Wikipedia | Google Sheets)

T-Test: Determines if the averages of two sets of results are significantly different from each other. There are different types of t-tests, and which one you use is determined by the p-value returned by the f-test. We are looking for a low t-test value, which indicates that the speed difference between LiteSpeed and Apache is unlikely to be due to chance. (Wikipedia | Google Sheets)
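The link between the two tests can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a record of what Svend did: the 0.05 threshold is a common convention that the post does not specify, and `choose_t_test` is a hypothetical name. The three p-values are the f-test results reported above.

```python
def choose_t_test(f_p_value: float, alpha: float = 0.05) -> str:
    """Pick a two-sample t-test variant from an f-test p-value.

    A low f-test p-value suggests the two datasets have different
    variances, so the unequal-variance (Welch) t-test applies. Otherwise
    the equal-variance (pooled) t-test is the usual choice. In Google
    Sheets these correspond to T.TEST type 3 and type 2 respectively.
    """
    if f_p_value < alpha:
        return "unequal variance (Welch)"
    return "equal variance (pooled)"


# F-test p-values reported in this post.
reported = {
    "Pingdom initial load": 0.05369249473,
    "Pingdom return load": 0.02524102277,
    "GTmetrix": 0.01739569946,
}
for test_name, p_value in reported.items():
    print(f"{test_name}: {choose_t_test(p_value)}")
```

Under the assumed 0.05 threshold, the Pingdom initial load result falls just on the equal-variance side, while the other two call for the unequal-variance test.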

Why Standard Deviation?

Svend performed all of these tests and made all of the calculations. He feels it is important to take a proper, statistically-sound approach to benchmarking. This approach involves comparing speed, loading, and repeated loading on a real website and a real server that handles IDS, security, and so on in an actual production environment.

For each server tested, Svend calculated the standard deviation of the results. The SD indicates the stability of the test results. If you have a low SD, you are essentially saying “this server performs consistently in these tests.” A high SD means that the results for that server are erratic and inconsistent. In these tests, the SD calculated for LiteSpeed results was always much lower than that calculated for Apache results, leading to the conclusion that LiteSpeed Web Server is the more consistently performing server.
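To make the idea concrete, here is a short Python sketch using the standard library. The two datasets are invented for illustration and are not Svend's measurements. The sketch uses the sample standard deviation (`statistics.stdev`); whether the original spreadsheet used the sample or population formula is not stated in this post.

```python
from statistics import mean, stdev


def summarize(timings_ms):
    """Return the count, mean, and sample standard deviation of a dataset."""
    return {
        "n": len(timings_ms),
        "mean_ms": mean(timings_ms),
        "sd_ms": stdev(timings_ms),
    }


# Hypothetical load times in milliseconds. Both sets average 100 ms.
steady = [100.0, 102.0, 98.0, 101.0, 99.0]
erratic = [60.0, 140.0, 80.0, 130.0, 90.0]

print(summarize(steady))
print(summarize(erratic))
```

Both hypothetical servers have the same average, but the second one's much larger SD shows that any single page load could land far from that average.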

Why F Tests and T Tests?

Being more consistent doesn’t necessarily make a server the better performer, however. And so Svend ran f-tests on most of the datasets above. Each f-test generated a p-value, which could be used to determine whether there was truly a significant difference between the two servers’ benchmark results. In every case, the p-value indicated that the test results of the two servers were significantly different from each other.

Now that he knew that there was definitely a difference, he needed to calculate the probability that one server would outperform the other. He ran t-tests on the datasets. The tests returned low values in all cases, which indicated a strong probability that LiteSpeed is faster than Apache.
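For readers who want to see what sits underneath those p-values, the Python sketch below computes the two test statistics with the standard library only. It is an illustration, not Svend's calculation: the datasets are invented, the function names are hypothetical, and the t-test shown is the unequal-variance (Welch) form. Turning a statistic into a p-value requires the F or t distribution, which a spreadsheet function or a statistics library would supply.

```python
from math import sqrt
from statistics import mean, variance


def f_statistic(a, b) -> float:
    """Ratio of the two sample variances, larger over smaller (so >= 1).

    Assumes neither dataset has zero variance.
    """
    var_a, var_b = variance(a), variance(b)
    return max(var_a, var_b) / min(var_a, var_b)


def welch_t(a, b):
    """Welch's t statistic and its approximate degrees of freedom."""
    # Squared standard error of each sample mean.
    se2_a = variance(a) / len(a)
    se2_b = variance(b) / len(b)
    t = (mean(a) - mean(b)) / sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df


# Hypothetical load times in milliseconds for two servers.
slower = [200.0, 210.0, 190.0, 205.0, 195.0]
faster = [100.0, 102.0, 98.0, 101.0, 99.0]

print("F statistic:", f_statistic(slower, faster))
print("Welch t, df:", welch_t(slower, faster))
```

A large F statistic means the two spreads differ, and a t statistic far from zero means the two averages differ by much more than the noise in the measurements.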

Why Follow This Process?

You might be wondering if all of this is necessary. After all, you can simply look at the data and see that LiteSpeed’s numbers are always better than Apache’s. While that might be true, the statistical approach gives you a way to scientifically quantify the significance of the differences between the two servers.

According to Svend, “I am tired of seeing ‘we did these 5 tests, on a completely blank system, that was not optimized for the system or the website prior to testing.’ It’s like testing a placebo to treat pneumonia. No wonder the antibiotics are working. It’s about testing and comparing, to find if the new antibiotic is better than the old (standard treatment). You need to do a lot more than 5 tests, both to be sure the results are correct, but also to see how much the test results differ over time.”

These tests definitively show:

- LiteSpeed is faster than Apache.

- Speed varies much less with LiteSpeed than with Apache, meaning it is consistently faster.

- Even in the initial load speed (where cache is not a factor) LiteSpeed outperforms Apache.

Care to have a look at the original test results? Check out the Google Sheet.

About Svend

Svend Gundestrup is an MD in his last year to become a specialist in Pulmonology. He runs a specialized website for education within the pulmonology field in Denmark, catering to Danish pulmonologists and registered nurses. In addition to his medical duties, Svend runs a consultant firm that specializes in IT, IT security and IT development, and also EPIC healthcare in Danish Hospitals. His website audience requires speed, stability, and interoperability between desktop, laptop, tablet, and mobile phone.

Svend is married with two kids, and has been playing with and building computers since the days of the 386 with coprocessor. Fluent in Linux, OS X, and Windows, Svend has been creating websites as co-projects for many years, working with Mambo and later Joomla.

—

This blog post was a team effort. Thanks to Svend for executing the tests, providing an explanation of his process, and helping me proofread. And thanks to Mark Zou for the helpful graphics illustrating each test.

–Lisa

Want to try LSCache for Joomla for yourself? Download the module.
