NFtR: QUIC Working Group Day Three

Thursday, October 5th. Seattle, WA.

This morning, I arrived at 351 Elliott Ave around 8:40 am, about 20 minutes too early. I plopped down in the lobby and chatted a bit with someone whom I took for a manager of some sort. He was in charge of a large model of the new F5 Networks building; the base of the model had some chips that needed repair, and two repairmen had come to take care of it. The actual building is currently under construction and should be ready by 2019. Boasting 44 floors and standing 660 ft. tall, the new F5 Networks tower is a bona fide skyscraper! F5 is growing, and Seattle is growing with it.

The meeting began at 9:33. After a few short preliminaries (Note Well, etc.), Mark Nottingham (Fastly), the working group’s co-chair, announced that Jeff Pinner (Lyft) and Roberto Peon (Facebook) had been nominated to lead a closed design team to come up with a QUIC API document.

Then Lars Eggert (NetApp) — the other co-chair — departed from his usual MO to deliver a warning to the participants:

There are fifty people in this room and half of you haven’t said a thing in two days! This is not good. The committee keeps on arranging venues that can accommodate this many people, relying on people like Martin [Duke (F5 Networks)] to have their companies host us.

It is hard not to agree with Lars, even though he sounded a bit apologetic in the end: Is there a good case for mute participants? Since they do not participate, are they even participants? Perhaps they participate during breaks? But those aren’t an official part of the conference. Are they?

It turns out that the breaks are a crucial part of moving the standardization process forward. At conferences like this one, people use the breaks to mingle and to discuss things face-to-face. In our case, however, the chairs went further and officially sanctioned break-time discussions as the place for people to hash out their technical issues. There are two problems with this:

- The working group’s discussion is supposed to be recorded. There are three official ways to discuss the IETF QUIC drafts:

  - the mailing list;

  - GitHub artifacts (issues, PRs, etc.); and

  - conferences and meetings.

  The first two are easy: the medium is the record. Meetings have a scribe whose job is to write everything down. These are not empty words: yesterday’s meeting stalled while we waited for someone to volunteer as scribe.

  Yet these rules are suspended for discussions during breaks: those discussions are not recorded.

- Remote participants cannot take part. Already second-class citizens, they have no way to join the discussions during breaks: even if the microphone stays on, so many groups of people are talking that it is just a din to those listening online.

On the other hand, these break-time discussions are quite productive. I do not know whether we should do anything about this, or what; I am just stating the facts.

Mark Nottingham then noted that there is a different dynamic when there are twenty people in the room versus fifty people:

This is not to say that fifty is bad, but you got to participate… In addition, I must note that this group is not very diverse, especially with regards to gender. I would love to see that change.

Speaking of representation: today, when the sign-in sheet was passed around, I had the presence of mind to glance over the preceding entries. There were names of several companies that I had not heard mentioned at the meeting. Below is the list of attending companies and organizations that I collected:

- F5 Networks

- ACLU

- Adobe

- Akamai

- Apple

- AT&T

- BBC

- Cloudflare

- Deutsche Telekom

- Fastly

- Huawei

- LiteSpeed Technologies

- Lyft

- Microsoft

- Mozilla

- NetApp

- Symantec

- Verizon

Around 9:45 am, Ian Swett (Google) began his ACKs and Recovery presentation. The first slide listed five QUIC ACK Principles, which Ian introduced as something we could all agree upon. Predictably, disagreement instantly ensued. After some dispute over the principles, we got to the meat of the ACK discussion. Ian recounted how some ACK (mis)behaviors only manifest themselves when the network experiences “ridiculously” high packet loss: ca. 30%.

With regard to the ACK frame structure, it was decided to remove the timestamps section from the ACK frame until we better understand how we want to use it to aid congestion control. In addition, the choice of congestion control algorithm for a connection should be part of connection negotiation. A separate working group is to make a proposal regarding the use of timestamps and ECN for congestion control.

Martin Duke raised an issue with the term “timestamp,” since the field does not match the common definition of a timestamp. As for the ACK delay time format, Jeff had the following comment to offer (here I am, unfortunately, paraphrasing; the original wording was better):

The biggest problem with the float format is that it claims to be something that it isn’t; the spec is wrong.

This elicited a couple of boos (I am assuming from the authors of the format).

Around 11:20, Mike Bishop (Microsoft) discussed the pros and cons of QPACK, which he authored, and of QCRAM, proposed by Buck Krasic (Google). The discussion went on for some time, as QPACK and QCRAM each have some desirable features and some undesirable ones, and the two sets are flipped between them, like yin and yang. Jeff, always quick with a metaphor, posited that what we wanted was a unicorn of a compression mechanism. I asked Buck whether there was any value in basing the new algorithm on HPACK at all: HPACK is so simple that it could just be thrown out and a new scheme written from scratch. Buck replied that this was a secondary consideration: the primary reason for basing QCRAM on HPACK was to keep all the decision making in the encoder. That is one of the desirable features, and on that point the consensus was near unanimous. (Many more questions and observations were offered by other members; I am only listing mine because I am partial.)
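To make “all the decision making in the encoder” a bit more concrete, here is a toy sketch in the spirit of HPACK’s dynamic table. It is not HPACK’s wire format, and it is not QCRAM or QPACK: the instruction tuples, the `ToyEncoder`/`ToyDecoder` names, the entry-count limit (HPACK actually limits the table by size in octets) and the `never_index` policy are all hypothetical simplifications. The point is only that the encoder chooses what to index and what to send literally, while the decoder has no policy of its own and simply replays the instructions, which keeps both tables in sync.

```python
from collections import deque
from typing import List, Tuple

Header = Tuple[str, str]


class ToyEncoder:
    """Makes every compression decision: what to index and what to send literally."""

    def __init__(self, max_entries: int = 4, never_index=("authorization",)):
        self.table = deque()                 # newest entry at position 0
        self.max_entries = max_entries       # simplification: HPACK limits octets, not entries
        self.never_index = set(never_index)  # hypothetical encoder-side policy

    def _insert(self, header: Header) -> None:
        self.table.appendleft(header)
        if len(self.table) > self.max_entries:
            self.table.pop()                 # evict the oldest entry

    def encode(self, headers: List[Header]) -> List[tuple]:
        out = []
        for name, value in headers:
            if (name, value) in self.table:
                # Already indexed: send only its position in the dynamic table.
                out.append(("idx", self.table.index((name, value))))
            else:
                # Send a literal and tell the decoder whether to index it.
                add = name not in self.never_index
                out.append(("lit", name, value, add))
                if add:
                    self._insert((name, value))
        return out


class ToyDecoder:
    """Has no policy of its own: it replays the encoder's instructions."""

    def __init__(self, max_entries: int = 4):
        self.table = deque()
        self.max_entries = max_entries

    def decode(self, instructions: List[tuple]) -> List[Header]:
        headers = []
        for ins in instructions:
            if ins[0] == "idx":
                headers.append(self.table[ins[1]])
            else:
                _, name, value, add = ins
                headers.append((name, value))
                if add:                      # mirror the encoder's insertion and eviction
                    self.table.appendleft((name, value))
                    if len(self.table) > self.max_entries:
                        self.table.pop()
        return headers
```

Encoding the same request twice with one `ToyEncoder` turns most of the second request into short `("idx", n)` references, and a `ToyDecoder` fed both instruction lists reconstructs both requests. Changing the indexing policy requires touching only the encoder.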

Have you ever hummed? What, do you mean like a tune? – you may ask. No, “hum” as in going “HUMMMM…” Sort of like chanting a mantra, but instead of OHMMM… you go HUMMM… You haven’t? I have! This is how (apparently!) IETF working groups gauge consensus on a question. When Mike Bishop posed the first question to be hummed, I was so surprised that I missed its gist completely. The second hum (humming? hummer? humster? hummedura?), however, was stated approximately as follows: “Hum if you are in favor of saying that it’s OK to abandon any and all HPACK compatibility or design principles when coming up with the new HTTP/QUIC header compression mechanism.” I hummed ‘yes!’ I am a hamster! Hear me HUM! This hum was judged to be in the affirmative.

During the last break of the day, I talked with Alan Frindell (Facebook) about compression. He had made several insightful comments during the preceding discussion, and the opportunity to pick his brain was too good to pass up. Turns out, they have been running modified HPACK code (part of proxygen) that supports QCRAM and QPACK in production! It’s hard to be better prepared than that!

Alan described Roberto’s two-stream framing proposal to me. I replied that yes, it is good, but we are digging into the transport! And as long as we are digging into the transport, we could do the same with one stream: specify a byte sequence to serve as a message separator and parse the messages out. Then the messages do not have to be put into the same packet. Further, I went on, the fact that QPACK uses extra streams is generally considered a strike against it, because we do not want to run out of streams. But why are there limitations to begin with? Why is the number of streams limited? (This is related to issue #796.) Had we an unlimited number of streams, the question of special framing for messages would not arise! Of course, lifting those restrictions would dig even deeper into QUIC…
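The one-stream idea can be sketched as a small reassembly loop: the sender appends a separator after each message, and the receiver buffers incoming stream bytes and splits off a message each time the separator shows up, even if it straddles two chunks. This is only a minimal sketch under stated assumptions: the separator value, the `SeparatorFramer` class and the `frame` helper are hypothetical, and it assumes message bodies never contain the separator. Compressed header blocks are binary, so a real scheme would need escaping or length prefixes to guarantee that.

```python
from typing import Iterable, List

SEPARATOR = b"\r\n\r\n"   # hypothetical separator; the proposal did not specify one


def frame(messages: Iterable[bytes], separator: bytes = SEPARATOR) -> bytes:
    """Sender side: append the separator after each message."""
    return b"".join(m + separator for m in messages)


class SeparatorFramer:
    """Receiver side: reassembles messages from one ordered byte stream.

    Assumes no message body contains the separator (no escaping is done).
    """

    def __init__(self, separator: bytes = SEPARATOR):
        self.separator = separator
        self.buffer = bytearray()

    def feed(self, chunk: bytes) -> List[bytes]:
        """Add newly received stream bytes and return every complete message."""
        self.buffer += chunk
        messages = []
        while True:
            pos = self.buffer.find(self.separator)
            if pos < 0:
                break            # keep any partial message for the next chunk
            messages.append(bytes(self.buffer[:pos]))
            del self.buffer[: pos + len(self.separator)]
        return messages
```

Feeding the output of `frame([b"first", b"second"])` to a `SeparatorFramer` in arbitrary small chunks yields `b"first"` and `b"second"` once their separators arrive. The need to scan for (and escape) the separator is one reason length-prefixed framing tends to be preferred for binary payloads.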

Buck discussed the benefits of reduced head-of-line (HoL) blocking, as measured in Google’s A/B testing earlier this summer. Reducing HoL blocking is definitely something to strive for.

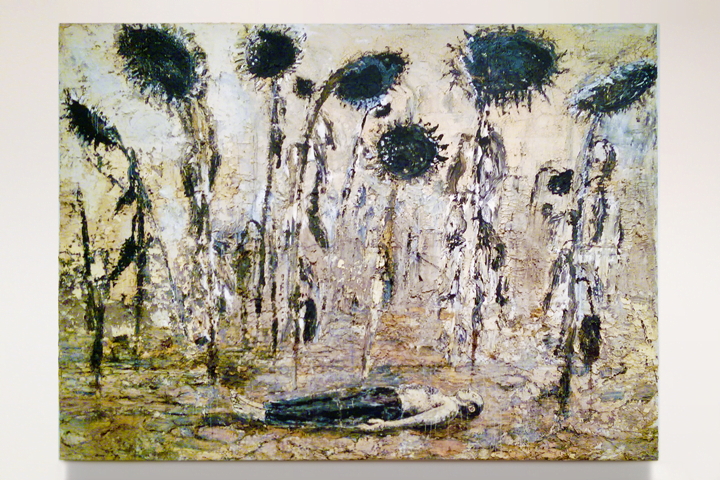

The meeting wrapped up at 3 pm, and I caught the #24 bus downtown. It was my plan all along to visit the Seattle Art Museum before going to the airport: SAM is open until 9 pm on Thursdays. Walking among works of art, I managed to forget QUIC for a little while. Centuries of civilization were speaking to me.

I only began to reflect upon the events of the past three days while definitely not enjoying the unappetizing dinner served at an airport restaurant. (It seems that at SeaTac airport they have thrown off all pretense: “Give us your money! Now, eat this nondescript mystery meat with some lettuce on it!”) The broiled crypto-chicken could not spoil my mood: I was happy.

The cast of characters in the wonderful play that is the QUIC standardization process is no longer just a bunch of email addresses and GitHub handles for me: I met Eric Rescorla, I argued with Buck Krasic, I shook Martin Thomson’s hand, I exchanged stories of QUIC’s deployment pitfalls with Jana Iyengar, I picked Alan Frindell’s brain. Now I know these (and other!) guys and, what’s more, I like them.

I gained valuable insight listening to the participants. Observations by Jeff Pinner, Martin Thomson (Mozilla), Roberto Peon, Alan Frindell, and Ian Swett offered me a new perspective on things. It is human nature to cooperate (the QUIC standardization process itself is a good example of this): people will share painfully learned lessons, ideas, problems, solutions, all for free! One only has to listen. What a great deal!
