Developer’s Corner: Head-of-Line Blocking Allows Attacks

Protocol designers underestimate

how badly people will implement

their designs.

– Adam Langley, Cryptographic Agility.

Abstract

Compression schemes that allow head-of-line blocking are targets for memory exhaustion attacks against the decoder. Detecting such an attack is not trivial. Without guidance, programmers are likely to produce vulnerable implementations. HoL blocking must either be prohibited at the protocol level or the attack mitigation mechanism must be specified explicitly in the compression specification. One way to avoid an attack is presented.

Introduction

In the previous article, we discussed how head-of-line blocking can cause priority inversion and vice versa. In the second part of the series, we will examine potential attacks made possible by head-of-line blocking.

Problem Statement

Head-of-line blocking causes the decoder to queue header blocks that cannot be decoded due to a missing dynamic entry. Under normal circumstances, this occurs because of packet loss or reordering. An adversary, however, may create this scenario artificially in order to get the decoder to queue data, thereby creating a denial-of-service attack.

Here, a simple recommendation that the decoder use a timer is insufficient. The decoder must decide how much data it is willing to buffer and for how long. In other words, it must be able to differentiate between an attack and a regular packet loss/reordering situation. An incorrect determination may mean allowing a server to be DoSed; err the other way, and a valid connection is aborted.

Sample Attack

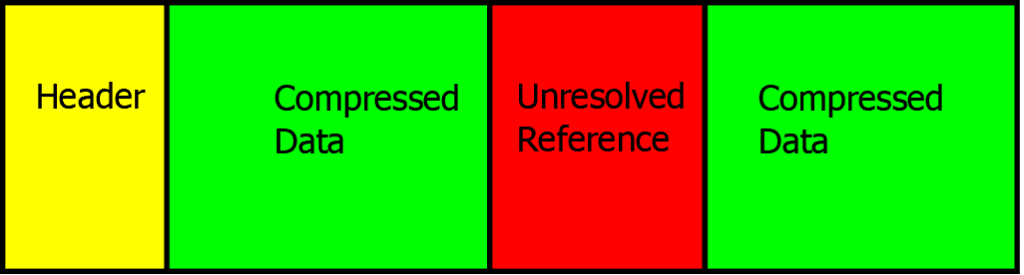

The attacker’s strategy is simple: send header blocks that contain a reference to a non-existent dynamic entry:

The decoder cannot resolve a reference and has to wait — buffering data. More and more header block data is sent. To maximize the effect of the attack, the adversary may use more than one — potentially all — available streams.

Mitigation Strategies

Strategy 1: Simple Timer

The decoder may associate a simple timer with each stalled header block. A simple timer has to be quite conservative in order to eliminate false positives. During that time, the attacker writes more data to each of the header blocks in a round-robin fashion, perhaps even resolving the blocking entry to reset the decoder timer while introducing a new unresolved entry at the same time. The amount of data queued at the decoder grows and grows and the server is eventually hosed.

Strategy 2: Adaptive Timer

A conservative timer means that the attacker has plenty of time to write more blocking data. An adaptive timer aims to reduce that window by using recent network conditions for determining a smaller timeout value. Besides RTT, it may need information such as the current CWND as well as callbacks fired when packet loss or timeout is detected. (This is digging pretty deep into the transport.) Even then, the adaptive timer must take into account that data could be lost multiple times as well as the possibility that the peer retransmits lost packets after it sends new data.

This approach lessens the impact (although without further analysis I cannot state to what degree), but does not solve the problem. In addition, the possibility of a false positive grows.

Strategy 3: Application-Aided Attack Detection

The decoder does not know whether the headers it receives make sense to the application or whether they are just fluff the attacker uses to blow up its blocked queue. The application knows about the headers it uses and thus could be called upon to evaluate the headers’ fluff level, sort of like an email SPAM filter.

This idea is not workable:

- While we (the IETF QUIC Working Group) do control the direction the transport layer takes, requiring all applications to provide extra logic to fight attacks is a non-starter.

- The attacker may simply target an application with special header blocks that would pass the checks.

Strategy 4: Change Application Interface

It is possible to change the decoder API to require the application to receive out-of-order headers, allowing the decoder to queue just the unresolved references. This is not good enough, either:

- The problem is just kicked upstairs — now the application must queue the data and decide when to abort the connection.

- If an indexed-name/literal header representation is used, with the index being unresolved, the decoder still has to queue the whole header field.

Strategy 5: Unread Data From Stream

One can imagine requiring the stream interface to be able to revert a read; alternatively, a peeking functionality may be provided. Either will allow the decoder to leverage the underlying stream’s queuing functionality instead of doing its own: when an unresolved entry is encountered, push back some data (or the whole header block). The advantage of using the stream for this is that the amount of data in the stream is limited by that stream’s maximum as well as the connection’s overall maximum.

The first downside of this approach is that unreading may not always be possible, as the application must take off data from streams so that the connection can proceed.

When it is possible, this strategy effectively exchanges a memory exhaustion attack for processor exhaustion attack. One can imagine an attacker now switching to feeding the decoder resolved entries and new data with unresolved entries, forcing it to read, decode, and push back the data.

In addition, it is unclear whether the QUIC standard will require the transport layer to provide such functionality. (Consider, for instance, the implications of deferring sending a WINDOW_UPDATE frame while waiting for the application to “commit” to a read.)

No Way to Detect An Attack

None of the mitigation strategies above help the decoder to detect an attack in time. An odd (for some value of “odd”) sequence of incoming streams, header blocks, and dynamic table updates may be a perfectly valid use case. An attack detection mechanism built into the decoder must be sure that it is not going to break applications. Thus, one implementation may do it well and another may do it poorly. Chances are good that it is the protocol that will take the blame. One could add: for a good reason.

What This Means

Section 7: Security Considerations of RFC 7541 (HPACK) is five pages long — a quarter of the whole text! (Sans preamble, table of contents, and appendices). Obviously, the security of the header compression mechanism has been addressed thoroughly in the past.

It is apparent that the compression mechanism that we (the Design Team) eventually present to the Working Group must either preclude this type of attack at the protocol level or offer a robust attack detection and mitigation that can pass a security review.

There are two ways to address this:

- Not allow head-of-line blocking in the final proposal.

- Agree that the final compression proposal

- limit HoL blocking in a quantifiable fashion and/or

- include a robust attack detection mechanism.

Limiting HoL blocking in a quantifiable fashion means that the compression mechanism guarantees that the amount of data that has to be queued at the decoder does not exceed some threshold.

A robust attack-detection mechanism implies that it is possible to implement using standard programming techniques. It would be unreasonable to require that every decoder implement a sophisticated attack detection logic. (This would also be against the QUIC WG mandate that the decoder be simple.) In addition, the detection code must be relatively inexpensive (memory, CPU) to operate as part of the decoder.

Potential Solution: Defer Reading

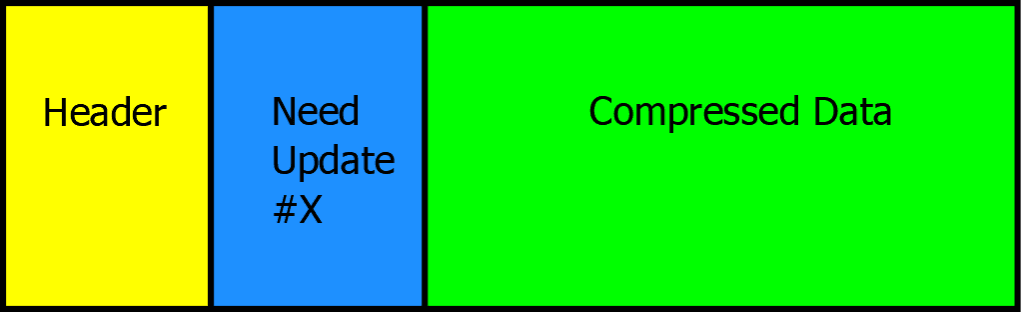

One potential solution to this is to prefix header block data with information that would tell the decoder whether the header block could be processed in one shot given current decoder state. For example:

If the Update #X has not yet arrived, the decoder does not read any more from this stream. This leaves the headers in the transport. (This takes Strategy 5 one step further.) Such approach limits the amount of memory required by the decoder.

The downside to this approach is that not draining the streams promptly may prevent the transport from operating efficiently.

Summary

Allowing HoL blocking and requiring the decoder to queue data and wait until it can be decoded is a denial-of-service attack vector. The simplest way to prevent such attack is to not allow HoL blocking at all. Deferring reading from affected streams is likely feasible but requires further analysis. GitHub issue #1011 was opened to track this.

Comments