Performance Comparison of QUIC with UDP and XDP

Goal: Determine whether any performance gain can be achieved with XDP UDP versus kernel UDP

HTTP/3 uses QUIC, which in turn uses UDP (User Datagram Protocol) rather than TCP as its transport. XDP (eXpress Data Path) is a relatively recent addition to the Linux kernel that gives user-space applications network performance comparable to that of in-kernel code.

The goal of this blog post is to determine whether QUIC gains enough performance from XDP to justify implementing it where it is available. Understanding performance gains usually requires detail, so in addition to a simple throughput test, a profiler is used to see exactly where the gains occur.

XDP can run with hardware support or entirely in software. To keep the XDP program usable in the widest possible range of computing environments, the tests target virtual machines.

The Test Environment

LSQUIC

The QUIC engine used was the popular open-source LSQUIC library available from LiteSpeed Technologies. Only small modifications were made to the base library to support XDP. The XDP functionality was provided by a separate module, which implemented LSQUIC callbacks to send, receive, and allocate packets. Testing was performed using the http_server and http_client programs provided by LSQUIC, modified as necessary.

The client machine ran a released version of the http_client program from the LSQUIC library and was always the receiver. It was configured to use the Q046 QUIC protocol version.

The server machine ran the modified version of the http_server program from the LSQUIC library, with command line parameters selecting either native UDP or XDP UDP. It ran in Interop mode, in which the program generates the data it transmits instead of reading it from disk.

Simple volume tests of 2 GB were executed to show the performance difference between native UDP and XDP UDP. A profiler was then used to examine the effect of XDP UDP versus native UDP inside the program and to help identify specific bottlenecks.

Hardware and Software

Server machine:

- OS: Lubuntu v19.04

- CPU: Xeon E7 2.4 GHz

- Host: Proxmox 6.1

- Host NIC: Intel X540-AT2 10Gb dual port NIC.

- VM type: KVM

- VM Memory: 8 GB

- VM NIC: virtio_net driver (it has specific performance improvements for XDP built in)

Client machine:

- OS: Lubuntu v19.04

- CPU: Xeon E5 3.6 GHz

- VM type: None.

- Memory: 8 GB

- NIC: Intel X540-AT2 10Gb dual port NIC.

NOTE: A non-VM machine with a high-speed data path was chosen to best simulate access to the wider internet in a controlled environment. Other works showing performance include this one, which shows runs that simply drop or move data without a real application involved. In contrast, we believe our comparison is the first in which a real application was used.

The Tests and Results

Three consecutive runs were performed from the client machine against the server machine, which was configured to run in native UDP mode. The reported results were:

- Test 1: 111,036,768 bytes/sec

- Test 2: 98,336,240 bytes/sec

- Test 3: 96,860,121 bytes/sec

This gives an average of 102,077,709 bytes per second.

The server was restarted to run in XDP UDP mode and the client tests were repeated:

- Test 1: 152,644,777 bytes/sec

- Test 2: 147,426,653 bytes/sec

- Test 3: 138,277,563 bytes/sec

This gives an average of 146,116,331 bytes per second.

XDP Gets the Win

Changing from native UDP sends to XDP UDP sends yields a 43% improvement in average throughput.
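The arithmetic behind the averages and the 43% figure can be reproduced with a few lines of Python. This is only a sketch for checking the numbers: the throughput values are the ones reported above, while the function and variable names are made up for illustration and are not part of LSQUIC or the test programs.

```python
# Throughput figures reported above, in bytes per second.
NATIVE_RUNS = [111_036_768, 98_336_240, 96_860_121]
XDP_RUNS = [152_644_777, 147_426_653, 138_277_563]


def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


def summarize(native_runs, xdp_runs):
    """Return the averages, the relative improvement, and decimal Gbit/s rates."""
    native_avg = mean(native_runs)
    xdp_avg = mean(xdp_runs)
    return {
        "native_avg": native_avg,
        "xdp_avg": xdp_avg,
        # Relative gain of XDP over native UDP, as a percentage.
        "improvement_pct": (xdp_avg / native_avg - 1.0) * 100.0,
        # 8 bits per byte, 1e9 bits per (decimal) gigabit.
        "native_gbit_s": native_avg * 8 / 1e9,
        "xdp_gbit_s": xdp_avg * 8 / 1e9,
    }


if __name__ == "__main__":
    for name, value in summarize(NATIVE_RUNS, XDP_RUNS).items():
        print(f"{name}: {value:,.2f}")
```

Expressed in decimal gigabits, the two averages come to roughly 0.82 and 1.17 Gbit/s, so neither run comes close to the line rate of the 10Gb NIC.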

Watching top during all runs showed that the server program was running at or near 100% CPU. On the client machine the CPU differences were significant: in XDP UDP mode the client's CPU utilization was close to or above 70%, while in native UDP mode it was below 60%. Since the client always had headroom and the server did not, the limit was the CPU on the server system, which is where the UDP transmissions were occurring.

Flame Graph from perf Profiling

Since there’s such a significant difference in performance, a profiler was used to see exactly where the program was spending its time. In particular, perf was used because it provides great detail.

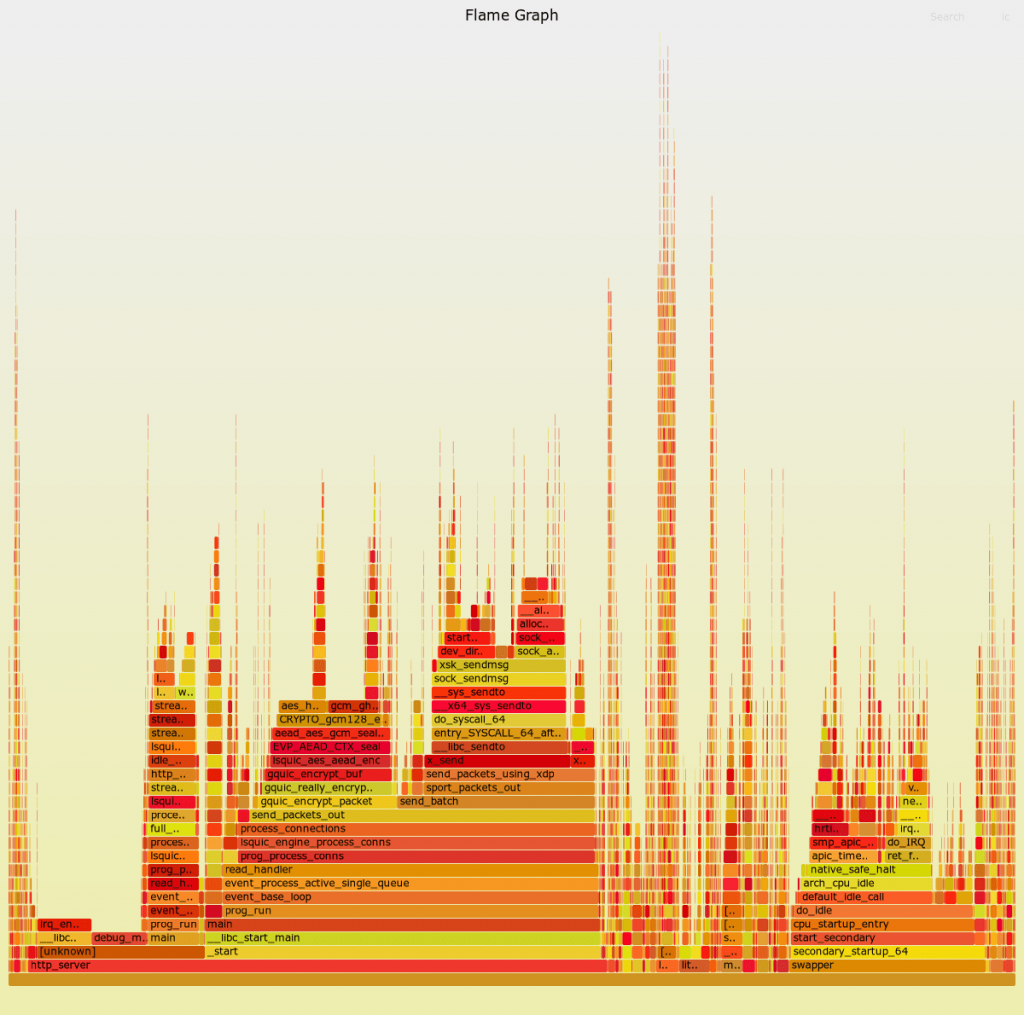

Flame Graph for native UDP

Processing the perf output with FlameGraph produces:

In a flamegraph the widest (horizontal) bars take the most CPU, and moving up the graph means going deeper into the call stack. At the bottom is http_server. The bar stays very wide until send_packets_out, an LSQUIC function that has two expensive children, gquic_encrypt_packet and send_batch, the latter being the more expensive of the two. Above send_batch the bar stays very wide into __libc_sendmsg, where execution leaves the program entirely and enters the kernel. From this we can determine that kernel overhead is by far the largest CPU cost.
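To make the shape of that call path concrete, here is a minimal sketch of a send loop that makes one sendmsg() system call per datagram, so the kernel is entered once per packet. This is not LSQUIC's code: the function names, packet sizes, and loopback addresses are assumptions chosen for illustration, and Python's socket.sendmsg is used only because it maps directly onto the same system call.

```python
import socket


def send_batch_sketch(sock, dest, packets):
    """Send each packet with its own sendmsg() call and return the call count.

    Hypothetical helper, not an LSQUIC function: every iteration crosses
    into the kernel once, which is the cost the flame graph attributes
    to the sendmsg path.
    """
    syscalls = 0
    for payload in packets:
        sock.sendmsg([payload], [], 0, dest)
        syscalls += 1
    return syscalls


def loopback_demo(n_packets=4, size=1200):
    """Send n_packets datagrams over loopback and collect what arrives."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
        receiver.settimeout(2.0)          # do not hang if a datagram is lost
        packets = [bytes([i]) * size for i in range(n_packets)]
        calls = send_batch_sketch(sender, receiver.getsockname(), packets)
        received = [receiver.recv(2048) for _ in range(n_packets)]
    finally:
        sender.close()
        receiver.close()
    return calls, received


if __name__ == "__main__":
    calls, received = loopback_demo()
    print(f"{calls} sendmsg() calls, {len(received)} datagrams received")
```

With thousands of packets per second, that per-packet trip through the kernel's UDP stack is the overhead the XDP path is meant to reduce.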

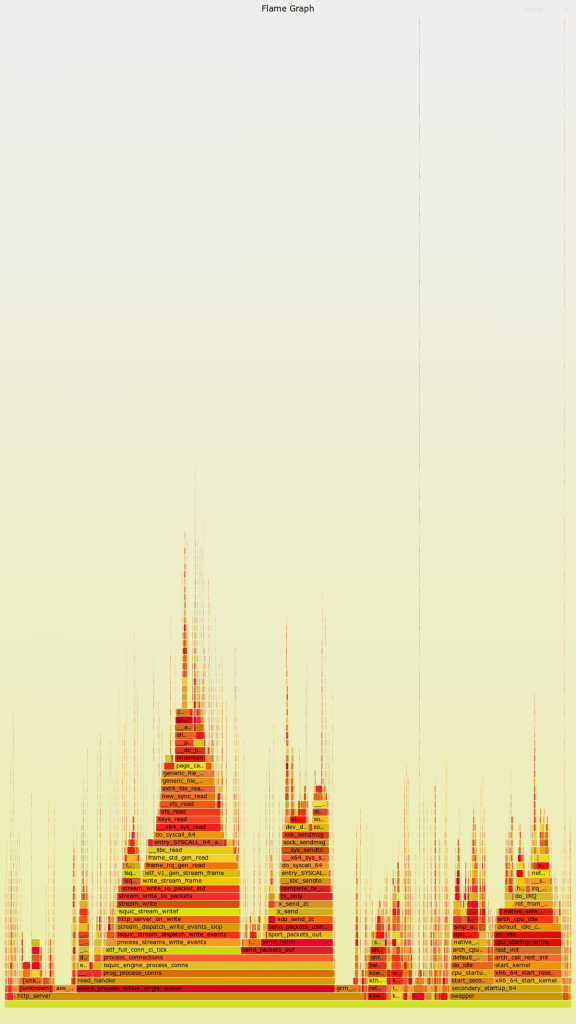

Flame Graph for XDP

Running the same perf run and FlameGraph with XDP results in:

This flamegraph separates into narrower functions much sooner, indicating that there are more contributors to CPU cost; in other words, there is no single bottleneck. As above, the widest row stays wide going into the program until send_packets_out, where it splits into gquic_encrypt_packet and send_batch, with send_batch being the slightly wider function. The entry point into the kernel is __libc_sendto, which is barely the widest bar at that depth, indicating that the CPU overhead of encryption is nearly as expensive as transmission.

This gives us an indication that the kernel overhead is substantially reduced in XDP and explains the performance improvements.

Conclusions

The goal of this blog post was to determine where the bottlenecks in QUIC and HTTP/3 are and to see if the XDP UDP interface could help in improving the overall performance. The results of the tests show quite convincingly that XDP reduces the kernel penalty of native UDP.

XDP UDP is not a solution for every environment, as it is Linux-specific, but for Linux web servers implementing XDP appears to result in higher overall performance.
