Improve Performance with DPLPMTUD

Alphabet soup to the rescue!

Introduction

DPLPMTUD, described in the newly published RFC 8899, is a way for a transport protocol to figure out the largest single packet that the network path to its peer can carry. QUIC, which performs packetization by itself, is the perfect use case for this mechanism. We implemented DPLPMTUD in our web server products and we see significant performance advantages.

Tame the Acronym

DPLPMTUD stands for Datagram Packetization Layer Path MTU Discovery, where MTU, of course, stands for Maximum Transmission Unit.

MTU is another way of saying “maximum packet size.”

MTU Discovery means figuring out what the maximum packet size is.

Having Path in the name reflects the fact that we care about the end-to-end (between client and server) MTU, not just between your browser and your wireless router.

The first three letters, DPL, describe where this algorithm is meant to be used: in the layer that arranges data into datagrams. Because QUIC arranges data into packets by itself, a QUIC implementation is that layer.

How it Works

By default, the maximum size of QUIC packets is limited to 1252 bytes (or 1232 bytes on IPv6). This is done to increase the chances of a successful QUIC connection, for research has shown that there are paths on the Internet that will drop packets larger than a certain threshold.

The downside to using the lowest common denominator is that throughput on paths with larger PMTUs suffers unnecessarily. To cope with that, the QUIC Internet Draft recommends that endpoints use either DPLPMTUD or PMTUD to find the optimal maximum packet size. For portability and simplicity, we chose to implement DPLPMTUD.

The main idea of DPLPMTUD is simple. Pick the maximum packet size that you would be willing to send (MAX_PLPMTU) and then search the space between the current PLPMTU and that maximum value. The search is performed by constructing and sending probe packets. These are packets whose size is larger than PLPMTU. If the peer acknowledges receipt of the probe, increase PLPMTU; otherwise, decrease the ceiling.
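The update rule described above can be sketched in a few lines of Python. This is only an illustration of the idea, not lsquic's code: the names `SearchState` and `on_probe_result` are hypothetical, and treating a lost probe of a given size as proof that the ceiling is one byte below that size is an assumption.

```python
from dataclasses import dataclass


@dataclass
class SearchState:
    plpmtu: int   # largest packet size known to work on this path
    ceiling: int  # largest size still worth probing (starts at MAX_PLPMTU)


def on_probe_result(state: SearchState, probe_size: int, acked: bool) -> SearchState:
    """Return the new search state after a probe of probe_size bytes."""
    if not state.plpmtu < probe_size <= state.ceiling:
        raise ValueError("probe must be larger than PLPMTU and no larger than the ceiling")
    if acked:
        # The path carried the probe, so regular packets may now be this large.
        return SearchState(plpmtu=probe_size, ceiling=state.ceiling)
    # Assumption: a lost probe means packets of probe_size or more do not fit.
    return SearchState(plpmtu=state.plpmtu, ceiling=probe_size - 1)
```

Either outcome narrows the gap between `plpmtu` and `ceiling`, which is what lets the search terminate.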

lsquic has two search modes, depending on the value of MAX_PLPMTU.

Search Mode 1

If MAX_PLPMTU is not specified, lsquic assumes that it is running over Ethernet and uses an MTU of 1500 bytes to calculate MAX_PLPMTU: 1472 bytes (or 1452 bytes on IPv6). It then tries MAX_PLPMTU first, hoping for the best case, in which its initial guess is right. If the probe is acknowledged, the maximum packet size is set to MAX_PLPMTU and the search ends.
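The arithmetic behind these numbers is just the MTU minus the IP and UDP headers. Here is a small sketch; the function name `max_udp_payload` is made up for illustration, and the header sizes assume no IPv4 options and no IPv6 extension headers.

```python
IPV4_HEADER = 20  # bytes, IPv4 header without options
IPV6_HEADER = 40  # bytes, fixed IPv6 header without extension headers
UDP_HEADER = 8    # bytes


def max_udp_payload(mtu: int, ipv6: bool = False) -> int:
    """Largest UDP payload (that is, QUIC packet) that fits in one IP packet."""
    ip_header = IPV6_HEADER if ipv6 else IPV4_HEADER
    return mtu - ip_header - UDP_HEADER


print(max_udp_payload(1500))             # 1472
print(max_udp_payload(1500, ipv6=True))  # 1452
```

The same formula applied to an MTU of 1280 bytes gives 1252 and 1232 bytes, which matches the default packet sizes quoted earlier. That the defaults were derived this way is an inference on my part.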

If the probe is not acknowledged (and, following the RFC’s recommendation, lsquic sends three probes one second apart), lsquic switches to a binary search. The size of the next probe is midway between the current maximum packet size and MAX_PLPMTU. The search ends when lsquic has found PLPMTU with at least 99% precision.

Search Mode 2

When MAX_PLPMTU is specified, lsquic starts the binary search immediately.
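To make the two modes concrete, here is a toy simulation of the search. It is a sketch under several assumptions, not a transcription of lsquic: `search_plpmtu` and `path_accepts` are hypothetical names, `path_accepts(size)` stands in for "one of the three probes of this size was acknowledged," and "99% precision" is interpreted as stopping once the known-good size is at least 99% of the remaining ceiling.

```python
from typing import Callable, List, Tuple


def search_plpmtu(
    current: int,
    max_plpmtu: int,
    path_accepts: Callable[[int], bool],
    try_max_first: bool,
    precision: float = 0.99,
) -> Tuple[int, List[int]]:
    """Return (discovered PLPMTU, list of probe sizes sent)."""
    floor, ceiling = current, max_plpmtu
    probes: List[int] = []

    # Mode 1: optimistically probe the maximum before anything else.
    if try_max_first and ceiling > floor:
        probes.append(ceiling)
        if path_accepts(ceiling):
            return ceiling, probes
        ceiling -= 1

    # Binary search (the fallback in mode 1, used right away in mode 2).
    while floor < ceiling and floor < precision * ceiling:
        probe = (floor + ceiling + 1) // 2  # midpoint, rounded up so probe > floor
        probes.append(probe)
        if path_accepts(probe):
            floor = probe
        else:
            ceiling = probe - 1
    return floor, probes


# A simulated path that carries QUIC packets of up to 1400 bytes.
print(search_plpmtu(1252, 1472, lambda size: size <= 1400, try_max_first=True))
# (1389, [1472, 1362, 1417, 1389, 1403])
```

In this simulated run the search settles on 1389 bytes after five probes. Keep in mind that in the real protocol every failed probe size costs several seconds of waiting, which is why trying the likely answer first pays off on Ethernet paths.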

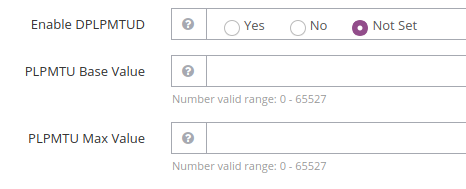

Web Server Settings

Performance Improvement

The performance gain of using larger packets is straightforward: fewer packets are needed to transfer the same amount of data. This has two positive effects:

- Both sender and receiver have fewer packets to keep track of. This means fewer packets to encrypt and fewer packets to acknowledge. (The sender having fewer acknowledgements to process is another beneficial effect.)

- Smaller network overhead, improving goodput.
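Both effects are easy to put numbers on. The sketch below counts the packets needed to carry 1 GB and the share of each IP packet spent on IP and UDP headers. It is deliberately simplified: 1 GB is taken to be 10^9 bytes, IPv4 is assumed, and QUIC's own headers, acknowledgements, and retransmissions are ignored. The helper names are made up for illustration.

```python
IP_UDP_OVERHEAD = 28  # bytes: 20-byte IPv4 header + 8-byte UDP header


def packets_needed(total_bytes: int, payload_size: int) -> int:
    """Number of packets needed to carry total_bytes (integer ceiling division)."""
    return (total_bytes + payload_size - 1) // payload_size


def header_overhead(payload_size: int) -> float:
    """Fraction of each IP packet taken up by the IP and UDP headers."""
    return IP_UDP_OVERHEAD / (payload_size + IP_UDP_OVERHEAD)


for size in (1252, 1472, 4096):
    print(size, packets_needed(10**9, size), f"{header_overhead(size):.2%}")
```

Under these assumptions, going from 1252-byte to 1472-byte packets cuts the packet count by roughly 15%.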

The Numbers

The speed-up is most easily observed when the sender transmits a lot of data and uses close to 100% of CPU. To do this, I used a command-line HTTP/3 client from our lsquic GitHub library to open five parallel connections to our HTTP/3 server on the local interface and fetch a 1 GB file each. (Five is an arbitrary number that produces a good load on my workstation; another number of connections could be used.) Using NetEm, I set the delay to be 1 ms (for an RTT of 2 ms).

I tested three scenarios:

- DPLPMTUD is turned off on the server. This is as if DPLPMTUD were never implemented — maximum QUIC packet size is 1252 bytes.

- DPLPMTUD is turned on. This results in server connections probing for a maximum packet size of 1472 bytes.

- DPLPMTUD is turned on and the Maximum PLPMTU is set to 4096 bytes. This is not a realistic value to use on the Internet, but plausible on a local network.

The metric is the time it takes all five connections to fetch a 1 GB file from the HTTP/3 server. Each scenario is run three times and the median time is recorded.

| Scenario | Timing | Improvement |
| --- | --- | --- |
| 1: No DPLPMTUD | 19.9 seconds | 0% (baseline) |
| 2: Default: PLPMTU is 1472 bytes | 17.7 seconds | 11% |
| 3: PLPMTU is 4096 bytes | 13.0 seconds | 35% |

Transferring data in about 8/9 of the time (17.7 seconds instead of 19.9) just by changing the packet size is a pretty good deal. Outside of the lab, the performance gain may not be as dramatic, but it will be a significant improvement nonetheless.
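For the curious, the Improvement percentages are simply the relative reduction in transfer time against the baseline. The snippet below recomputes them from the timings reported above; the `improvement` helper is just an illustration.

```python
def improvement(baseline_s: float, measured_s: float) -> float:
    """Relative reduction in transfer time compared to the baseline."""
    return (baseline_s - measured_s) / baseline_s


BASELINE = 19.9  # seconds, scenario 1 (no DPLPMTUD)

for label, seconds in (("1472 bytes", 17.7), ("4096 bytes", 13.0)):
    print(f"{label}: {improvement(BASELINE, seconds):.0%}")
# 1472 bytes: 11%
# 4096 bytes: 35%
```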

Browser Support

The browsers do not need any configuration to take advantage of DPLPMTUD: it will just work. Chrome limits PLPMTU to 1472 bytes by sending the special max_udp_payload_size QUIC transport parameter. Firefox does not limit PLPMTU. In practical terms, in a default server setup, the effect will be the same on both these browsers.
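One way to picture why the two browsers end up in the same place: the server can only probe up to the smaller of its own maximum and whatever the client advertises. The sketch below is an assumption about how such a limit would be combined, not lsquic's actual logic, and `effective_max_plpmtu` is a hypothetical name. The value 65527 is the default that RFC 9000 assigns to max_udp_payload_size when a peer does not send it.

```python
from typing import Optional

DEFAULT_MAX_UDP_PAYLOAD_SIZE = 65527  # RFC 9000 default when the parameter is absent


def effective_max_plpmtu(local_max: int, peer_max_udp_payload_size: Optional[int]) -> int:
    """Upper bound for probing: the smaller of our limit and the peer's limit."""
    peer_limit = (
        DEFAULT_MAX_UDP_PAYLOAD_SIZE
        if peer_max_udp_payload_size is None
        else peer_max_udp_payload_size
    )
    return min(local_max, peer_limit)


print(effective_max_plpmtu(1472, 1472))  # client that advertises 1472: 1472
print(effective_max_plpmtu(1472, None))  # client that sets no limit:   1472
```

With the default server maximum of 1472 bytes, both cases give the same ceiling. The client-side limit only starts to matter once the server's maximum is raised above it.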

Conclusion

DPLPMTUD is a neat and effective performance booster for a protocol that is not easy to optimize. Users of LiteSpeed products can take advantage of it today: DPLPMTUD support is included in OpenLiteSpeed 1.7.5 and the upcoming LiteSpeed Web Server 6.0, and it is enabled by default. Following our pioneering tradition, we deliver cutting-edge technology before anybody else.

Finding a good PLPMTU value quickly is the subject of ongoing research — both here at LiteSpeed and in the IETF community. Stay tuned, for we reserve the right to make our implementation even faster in the future!
